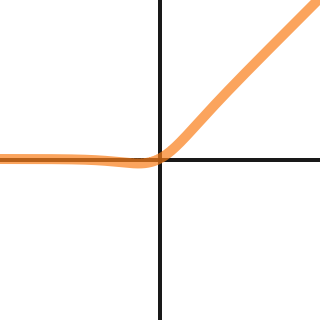

Choosing a large β may cause exploding-gradient problems. For that reason, we conduct our experiments with values of the parameter β in the range 1 ≤ β ≤ 2.

Inspired by the original Swish paper, we plot below the output landscape of a random network, which is the result of passing the coordinates of each point of a grid through the network.

Figure 4: Output landscape for a random network with different activation functions. The network is composed of 6 layers with 128 neurons each and initialized to small values using the Glorot uniform initialization (Glorot & Bengio, 2010).

In the original Swish paper, the improvements associated with the Swish function were attributed to the smoothness of the Swish output landscape, which directly impacts the loss landscape. As can be seen in Figure 4, E-swish output landscapes also have the smoothness property. If we plot the output landscapes in 3D, as in Figure 5, the slope of the E-swish landscape is higher than that of Relu and Swish. We found that the higher the parameter β, the higher the slope of the loss landscape. Therefore, we can infer that E-swish will probably learn faster than Relu and Swish as the parameter β increases. As a reference, we also plot the landscape of the Elu activation function (Clevert et al., 2015), since it has been shown to learn faster than Relu, and its slope is higher too.

Figure 5: 3D projection of the output landscape of a random network. Values were obtained by passing the coordinates of each point in a grid through a randomly initialized 6-layer neural network with 128 neurons in each layer. Best viewed in color.

First of all, we want to show that E-swish is also able to train deeper networks than Relu, as shown for Swish in Ramachandran et al. (2017). We conduct an experiment similar to the one performed in that paper: we train fully connected networks of different depths on MNIST, with 512 neurons in each layer.
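The output landscapes described for Figures 4 and 5 can be approximated with a minimal sketch like the one below. It uses only the Python standard library so that it stays self-contained. Several details are assumptions that the text does not specify: the network takes the 2-D grid coordinates as input, has 6 hidden layers of 128 units followed by a linear scalar read-out, uses no biases, and is evaluated on a small grid over [-1, 1]². The names `build_network`, `forward` and `output_landscape` are hypothetical helpers, not code from the paper.

```python
import math
import random


def e_swish(x, beta=1.5):
    """E-swish: beta * x * sigmoid(x), with the sigmoid computed in a stable form."""
    if x >= 0:
        s = 1.0 / (1.0 + math.exp(-x))
    else:
        z = math.exp(x)
        s = z / (1.0 + z)
    return beta * x * s


def relu(x):
    """Relu: max(0, x)."""
    return max(0.0, x)


def glorot_uniform(fan_in, fan_out, rng):
    """Matrix (fan_out rows, fan_in columns) drawn from U(-l, l), l = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]


def build_network(n_layers=6, width=128, seed=0):
    """Assumed layout: 2-D input, n_layers hidden layers, one linear scalar output, no biases."""
    rng = random.Random(seed)
    sizes = [2] + [width] * n_layers + [1]
    return [glorot_uniform(a, b, rng) for a, b in zip(sizes[:-1], sizes[1:])]


def forward(weights, point, activation):
    """Pass one (x, y) point through the hidden layers, then the linear read-out."""
    h = list(point)
    for w in weights[:-1]:
        h = [activation(sum(wi * hi for wi, hi in zip(row, h))) for row in w]
    return sum(wi * hi for wi, hi in zip(weights[-1][0], h))


def output_landscape(weights, activation, n=5, span=1.0):
    """Evaluate the network on an n x n grid over [-span, span]^2 (rows index y, columns index x)."""
    axis = [-span + 2.0 * span * i / (n - 1) for i in range(n)]
    return [[forward(weights, (x, y), activation) for x in axis] for y in axis]


if __name__ == "__main__":
    net = build_network()
    for name, act in (("relu", relu), ("e_swish", e_swish)):
        values = [v for row in output_landscape(net, act) for v in row]
        print(f"{name}: min={min(values):+.4f} max={max(values):+.4f}")
```

Comparing the spread between the minimum and maximum output for each activation gives a rough, non-authoritative proxy for the slope of the landscape; the resulting grid can be passed to any plotting library to draw a heat map or a 3D surface.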
E-swish is just a generalization of the Swish activation function (x*sigmoid(x)), which is multiplied by a parameter β:

f(x) = β · x · sigmoid(x)

It is very difficult to determine why some functions perform better than others, given the presence of many confounding factors. Despite this, we believe that the non-monotonicity of E-swish favours its performance. The fact that the gradients for the negative part of the function approach zero can also be observed in the Swish, Relu and Softplus activations. However, we believe that the particular shape of the curve in the negative part, which gives both Swish and E-swish the non-monotonicity property, improves performance, since they can output small negative numbers, unlike Relu and Softplus. We also believe that multiplying the original Swish function by β > 1 accentuates its properties.
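As an illustration of the definition in this section (Swish multiplied by β), the following minimal sketch implements E-swish and its derivative for scalar inputs. The default `beta=1.5` is an arbitrary choice, and the function names are hypothetical; neither comes from the paper.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid, written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def e_swish(x: float, beta: float = 1.5) -> float:
    """E-swish: beta * x * sigmoid(x). With beta = 1 this is plain Swish."""
    return beta * x * sigmoid(x)


def e_swish_grad(x: float, beta: float = 1.5) -> float:
    """Derivative with respect to x: beta * (s + x * s * (1 - s)), where s = sigmoid(x)."""
    s = sigmoid(x)
    return beta * (s + x * s * (1.0 - s))


if __name__ == "__main__":
    for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"x={x:+.1f}  e_swish={e_swish(x):+.4f}  grad={e_swish_grad(x):+.4f}")
```

Because the derivative scales linearly with β, larger values of β give proportionally larger gradients at every input.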

The choice of activation function has a notable impact on both the training and testing dynamics of a neural network. A correct choice of activation function can speed up the learning phase and can also lead to better convergence, resulting in improved metrics and/or benchmarks. Initially, since the first neural networks were shallow, sigmoid or tanh nonlinearities were used as activations. The problem came when the depth of the networks started to increase and it became more difficult to train deeper networks with these functions (Glorot & Bengio, 2010). The introduction of the Rectified Linear Unit (ReLU) (Hahnloser et al., 2000; Jarrett et al., 2009; Nair & Hinton, 2010) allowed the training of deeper networks while providing improvements that enabled new state-of-the-art results (Krizhevsky et al., 2012). Since then, a variety of activations have been proposed (Maas et al., 2013; Clevert et al., 2015; He et al., 2015; Klambauer et al., 2017). However, none of them has managed to replace Relu as the default activation for the majority of models, due to inconsistent gains and computational complexity (Relu is simply max(0, x)). In this paper, we introduce a new activation function closely related to the recently proposed Swish function (Ramachandran et al., 2017), which we call E-swish.
